Trustworthy and Responsible AI-Centric Test Engineering (TRACE)

Funding Agency: Software Center

Principal Investigators: Gregory Gay, Eduard Enoiu (Mälardalen University)

Involved Lab Members: Gregory Gay, Francisco Gomes de Oliveira Neto

Other Researchers: Eduard Enoiu, Jean Malm (Mälardalen University)

Industrial Collaborators: Ericsson, Grundfos, Volvo AB, Volvo Car Corporation, Volvo Construction Equipment, Zenseact

Publications: (van Heijningen et al., 2024), (Ekström et al., 2026), (Enoiu et al., 2026)

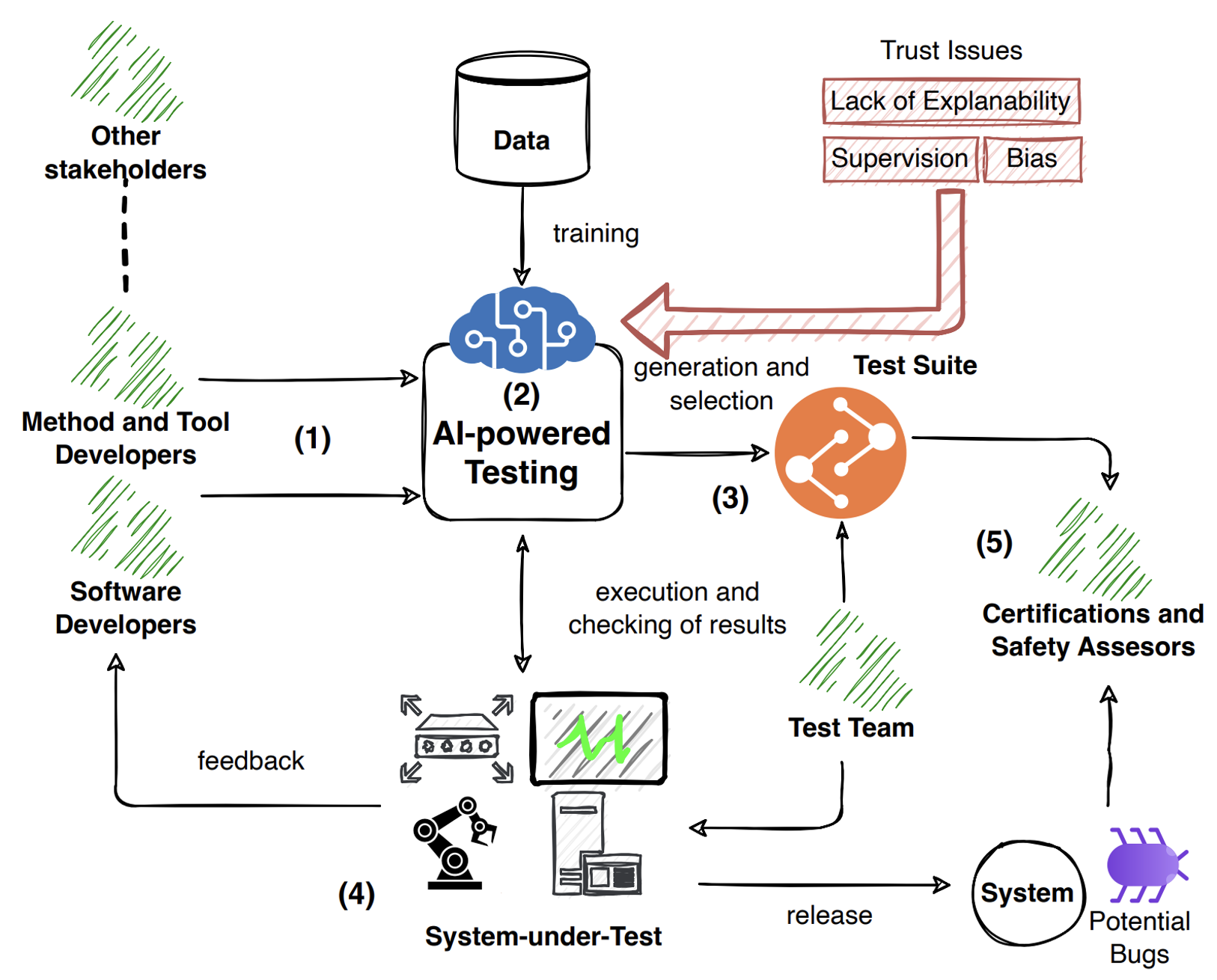

Software testing is, in general, a notoriously expensive and effort-intensive process. The integration of artificial intelligence and machine learning into the software testing and analysis processes offers a transformative approach to optimizing test case design, selection, execution, and maintenance.

AI and ML can be used to automate or support activities throughout the testing process, including the creation of new test cases, extension or maintenance of existing test cases, detection and re-creation of anomalies, analysis of system logs, suggestions on how to improve test suite quality, and prioritization of tests to execute in CI/CD pipelines.

Such support, commonly delivered either by fully or partially autonomous software agents or through an interactive human-in-the-loop process (e.g., where developers and the tool “chat” with each other), could substantially reduce the cost of developing the complex software that powers our society while improving its quality. It could also play a critical role in reducing the cost of testing and in freeing developers to focus on critical challenges.

However, there are also tremendous risks in improperly integrating AI/ML tools into the testing process. AI-based prediction models can introduce challenges such as unpredictability and a lack of explainability. Given the mission-critical nature of the tested systems, these tools must be effective, transparent, unbiased, and aligned with human needs and requirements.

The goal of this project is to explore, investigate, implement, and evaluate a trustworthy, human-centric AI-driven testing process. Our vision is that such a process:

- Uses AI and ML to augment, rather than replace, developers.

- Enables developers to make effective, data-driven decisions during testing.

- Offers trustworthy, repeatable, and explainable results.

This project is an umbrella for multiple related sub-projects. The topics currently under investigation include:

- LLM-enabled fault localization.

- Generation of human-like test cases using AI/ML techniques.

- Exploration of synergies between human and automated test creation.

- Investigation of cognitive and human factors to improve test automation.

- Integration of automated testing tools into the developer workflow.

- Investigation of trust challenges in test automation.

- Integration of visualization and data analysis techniques to support testing.