Exploring the Integration of Large Language Models in Industrial Test Maintenance Processes

WASP Industrial Ph.D.

Funding Agency: Wallenberg Autonomous Systems, AI, and Software Program (WASP)

Call: Industrial Ph.D. Student Positions 2024

Principal Investigator: Gregory Gay

Involved Lab Members: Gregory Gay, Nasser Mohammadiha, Jingxiong (Roy) Liu

Industrial Collaborator: Ericsson

Publications: (Liu et al., 2025).

Software testing is a crucial component of the development process. It is also notoriously expensive. Although creating tests carries an initial cost, the need to maintain those tests imposes a far greater one. Automated software tools can reduce the cost and improve the quality of test maintenance. However, despite the importance and cost of test maintenance, there has been little research on automating it.

In this project, we focus on the potential of large language models (LLMs) to provide such support. LLMs are an emerging technology skilled at language analysis and transformation tasks such as translation, summarization, and decision support, owing to their ability to infer semantic meaning from both natural language and software code. As a result, LLMs have shown promise in automating development and testing tasks, including test creation, documentation, and debugging.

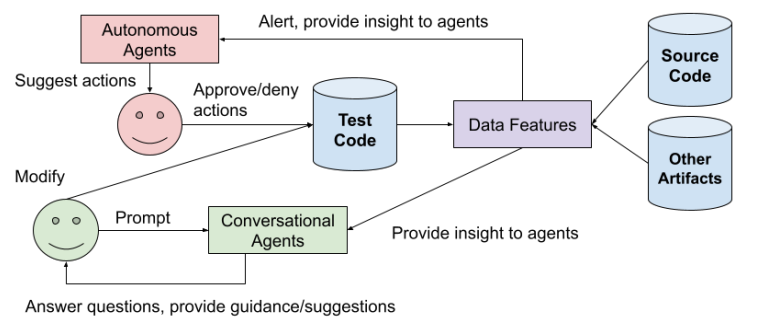

The purpose of this research is to develop LLMs and LLM agents (autonomous agents coupling an LLM with tool access and memory mechanisms) and integrate them into the test maintenance process. We will design LLM agents that provide two forms of support and investigate their strengths and limitations. First, we will explore how LLM agents can automate maintenance tasks, such as the creation or modification of test code. This stage includes both human-on-the-loop scenarios, where humans review the actions taken by LLM agents before they are committed to the code repository, and full automation, where LLM agents take unsupervised action. Second, we will explore human-in-the-loop scenarios where autonomous agents serve as conversational assistants, providing targeted suggestions or guidance in response to active queries from developers.

This research will be conducted by an industrial Ph.D. student at Ericsson AB and Chalmers University of Technology. We, the collaborators in this project, recently conducted an exploratory case study of potential applications of LLM agents in test maintenance. Based on our results, we have identified fundamental research questions tied to clear industrial challenges. We aim to provide general scientific solutions that will benefit both Ericsson and other companies developing critical software.